New harddrive: WD6000HLHX

Posted on September 10, 2010.

While SSDs are all the rage nowadays (and for good reason), I don’t trust them yet for my “important” storage, as I’m not familiar with their failure behaviour. So when one of my hard-drives recently started misbehaving, I bought the new WD Raptor model. Since some people might be interested in how it performs in some synthetic tests under Linux, here are some fio results.

Now, I admit that 10K RPM for desktop is a “want” versus “need” discussion, but nevertheless, these hard-drives are (for their capacity) quite affordable, compared to SSDs, and faster than a regular 7200 RPM drive. So:

    # smartctl -i /dev/…
    …
    Device Model: WDC WD6000HLHX-01JJPV0
    Serial Number: WD-WXA1C20U0627
    Firmware Version: 04.05G04
    User Capacity: 600,127,266,816 bytes

The older hard-drive I’m comparing to is also a WD Raptor, the WD3000GLFS model (yes, I’m a sucker for shiny hardware).

A few of the SMART capabilities seem to have changed (e.g. a new Phy Event Counter has been added, CRC errors within host-to-device FIS, the SCT Status Version is now 3 instead of 2, and the Temperature History Size is now 478 instead of 128), but nothing significantly different that I can see.

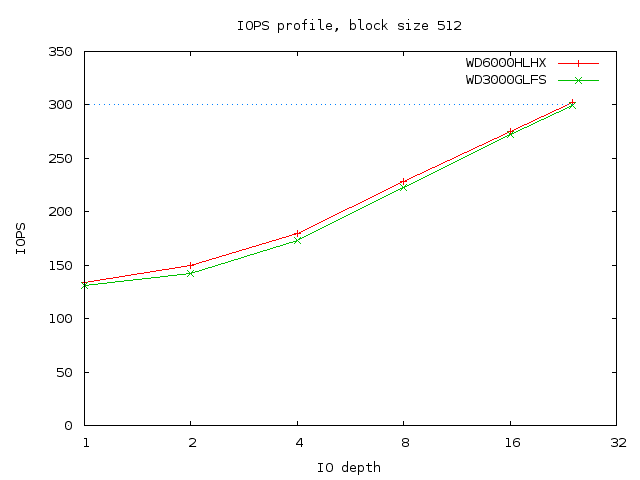

First, the IOPS test: nothing changed here. To be honest, even the WD3000 model had pretty good performance. The fio job definition is:

    [global]
    filename=${DRIVE}
    iodepth=${IODEPTH}
    bs=${BS}
    ramp_time=10
    runtime=120

    [rate-iops]
    rw=randread
    ioscheduler=noop
    ioengine=libaio
    direct=1

I did tests for block sizes of 512 and 4096 bytes (but the results were so close that I only used the 512-byte ones), and at queue depths of 1, 2, 4, 8, 16 and 24 (not 32 as AFAIK the SATA protocol only allows a max queue depth of 31, and I wanted to test only the drive’s queue).
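The job file takes its drive, queue depth and block size from `${DRIVE}`, `${IODEPTH}` and `${BS}`, which fio expands from the environment. A minimal sketch of how such a sweep could be driven is below; the job file name `rate-iops.fio` and the device path `/dev/sdX` are placeholders, not the names actually used for these tests, and the script only prints the commands unless `dry_run` is turned off.

```python
import os
import subprocess

QUEUE_DEPTHS = [1, 2, 4, 8, 16, 24]  # depths listed in the post
BLOCK_SIZES = [512, 4096]            # block sizes listed in the post, in bytes


def build_runs(drive, job_file="rate-iops.fio"):
    """Return one (env, argv) pair per block size / queue depth combination.

    `job_file` is a hypothetical file name holding the job definition above.
    """
    runs = []
    for bs in BLOCK_SIZES:
        for depth in QUEUE_DEPTHS:
            # fio substitutes ${DRIVE}, ${IODEPTH} and ${BS} from the environment.
            env = {"DRIVE": drive, "IODEPTH": str(depth), "BS": str(bs)}
            runs.append((env, ["fio", job_file]))
    return runs


def run_all(drive, dry_run=True):
    """Print every fio invocation; execute it too when dry_run is False."""
    for env, argv in build_runs(drive):
        settings = " ".join(f"{key}={value}" for key, value in env.items())
        print(settings, " ".join(argv))
        if not dry_run:
            # Merge the sweep variables into the current environment.
            subprocess.run(argv, env={**os.environ, **env}, check=True)


if __name__ == "__main__":
    # "/dev/sdX" is a placeholder device path.
    run_all("/dev/sdX", dry_run=True)
```

The job only reads (`rw=randread`), but it still goes to the raw device with `direct=1`, so double-check the device path before switching off the dry run.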

So:

As I said, nothing really changed, but starting at 130 IOPS at queue depth 1 and reaching more than 200 IOPS at queue depth 8 (which, again, is rarely reached at home) is nice.
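To put those IOPS figures in perspective, here is a rough back-of-the-envelope calculation. It assumes the 130 IOPS figure is for a single outstanding request, so that the average service time is simply the inverse of the IOPS, and it treats everything that is not rotational latency as "seek and overhead"; both are simplifications rather than measured values.

```python
RPM = 10_000        # spindle speed of the drive
IOPS_QD1 = 130      # random-read IOPS at the lowest queue depth
IOPS_QD8 = 200      # "more than 200" at queue depth 8, taken as a lower bound

# With one request in flight, each read takes 1/IOPS seconds on average.
service_time_ms = 1000 / IOPS_QD1                 # about 7.7 ms

# One revolution at 10K RPM takes 6 ms; on average the head waits half of it.
rotation_ms = 60_000 / RPM
avg_rotational_latency_ms = rotation_ms / 2       # 3 ms

# Whatever is left is seek time plus transfer and protocol overhead.
seek_and_overhead_ms = service_time_ms - avg_rotational_latency_ms

# Gain from letting the drive reorder eight queued requests.
queueing_gain = IOPS_QD8 / IOPS_QD1

print(f"service time at QD1:    {service_time_ms:.2f} ms")
print(f"avg rotational latency: {avg_rotational_latency_ms:.2f} ms")
print(f"seek + overhead:        {seek_and_overhead_ms:.2f} ms")
print(f"QD8 vs QD1:             at least {queueing_gain:.2f}x")
```

So a bit under 5 ms per random read goes to seeking and overhead, and queueing buys roughly a 1.5x improvement.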

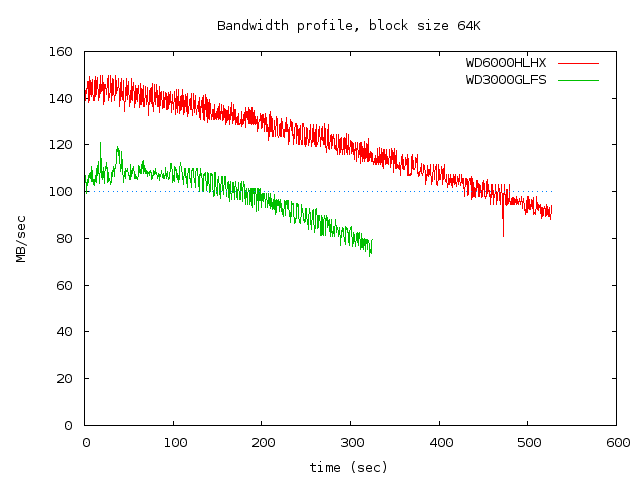

Next, the bandwidth tests. This uses the standard disk-zone-profile job shipped with fio:

    [global]
    bs=64k
    direct=1
    rw=read
    ioengine=libaio
    iodepth=2
    zonesize=256m
    zoneskip=2g
    write_bw_log

    [disk_zoning]
    filename=${DRIVE}

Here the changes are significant. Whereas the previous generation topped at around 110MB/s, the new model tops at over 140MB/s:

I’m not sure what makes the old model vary so much at the start of the test (I repeated the measurement twice, and it looks the same), but in any case, there are a few conclusions to be drawn:

- the new model is fast; the logs tell me that in the first 30 entries (zones), there are 10 entries with speeds of over 150000 KB/s (147MB/s)

- even though the capacity has been doubled, the time to read the entire drive (in zones) has only increased by ~62%, which is good

- I’ll need more than Gigabit Ethernet to be able to saturate just one of these drives in my storage box; even at the slowest, the drive is over 90MB/s
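The arithmetic behind the last two points can be sketched as follows. The capacities and throughput figures are the rounded numbers quoted in this post; the Gigabit Ethernet figure is the raw line rate with no protocol overhead, and treating the post's "MB/s" as MiB/s is an assumption.

```python
# Twice the capacity read in ~62% more time means a higher average throughput.
capacity_ratio = 600 / 300          # new vs old capacity, in GB
read_time_ratio = 1.62              # full-drive read takes ~62% longer
avg_throughput_gain = capacity_ratio / read_time_ratio

# Raw Gigabit Ethernet line rate, ignoring Ethernet/IP/TCP overhead.
gigabit_bytes_per_s = 1_000_000_000 / 8
gigabit_mib_s = gigabit_bytes_per_s / 2**20

# Rounded drive figures from the bandwidth test (assumed to be MiB/s).
drive_peak_mib_s = 140              # "over 140MB/s" in the fastest zones
drive_slowest_mib_s = 90            # "over 90MB/s" in the slowest zones

print(f"average throughput gain: {avg_throughput_gain:.2f}x")
print(f"Gigabit Ethernet ceiling: {gigabit_mib_s:.1f} MiB/s")
print(f"fastest zones exceed the link: {drive_peak_mib_s > gigabit_mib_s}")
print(f"slowest zones exceed the link: {drive_slowest_mib_s > gigabit_mib_s}")
```

In other words, the new drive is on average a bit over 20% faster across the whole surface, and a single Gigabit link (about 119 MiB/s at best) is the bottleneck in the fast outer zones but not in the slowest inner ones.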

So, I can say I’m pretty happy. The 300GB model was a tiny bit too small for me, but the new size is great, and it seems the sequential read/write speed is also good. Let’s see how long they will last, though; HDD failures are (unwanted) friends of mine…